Google's DeepMind Artificial Intelligence Taught Itself To Play Breakout

Google's DeepMind is being used for a variety of things the company can't talk about, but one thing it can talk about is DeepMind's knack for playing video games exceptionally well... even better than humans.

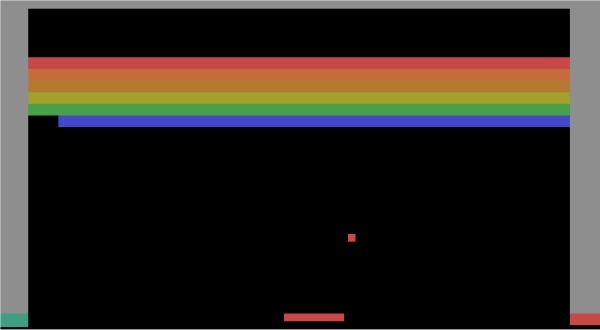

New Scientist has an interesting article up about DeepMind, the headline-making artificial intelligence that plays video games by learning how they work and then responding accordingly to rack up high scores. So far, the technology has only been used successfully on Atari 2600 games like Breakout, Asteroids and other simple, pixel-based games from gaming's yesteryears.

In fact, after 600 games of Breakout, DeepMind finally learned how to “tunnel,” which means breaking through the blocks along one edge of the stage so the ball slips behind the wall and breaks the remaining blocks from behind. You can see an example in the video demonstration below.

That's very impressive for an A.I., but it takes humans far fewer than 600 games to pick up on the back-wall trick.

DeepMind co-founder Demis Hassabis was impressed with the technique nonetheless, saying...

"That was a big surprise for us," … "The strategy completely emerged from the underlying system."

As soon as word got out that the A.I. had “figured out” how to play Breakout and achieve optimal success (despite taking 600 games to get there), people began comparing DeepMind to Skynet. Because why not?

In some respects those fears aren't entirely unwarranted, especially considering that DeepMind isn't designed just for games. According to Hassabis...


"We can't say anything publicly about this but the system is useful for any sequential decision making task," … "You can imagine there are all sorts of things that fit into that description."

I imagine DeepMind has a long way to go before pulling off a Terminator-sized “Judgment Day” event, given that it's still taking a while just to learn simple, single-plane Atari 2600 titles. Yes, there are a lot of complexities involved in getting an A.I. to learn to play a game without being taught, but success is very narrowly defined in many 2D titles, especially the ones on the old Atari.

I'll be impressed when DeepMind graduates to the NES and can beat something like Double Dragon II or Ninja or Rad Racer. I'll elevate that admiration when they can get DeepMind to start playing SNES and Sega Genesis titles, mastering and learning how to organically play Super Mario World, Sonic The Hedgehog or Final Fantasy V. And beyond that, I'll start showing signs of fear and trepidation when DeepMind can master Super Mario 64, Gran Turismo and Katamari Damacy. Once DeepMind can conquer Grand Theft Auto V without dying, I'll be truly terrified.

Michael Cook of Goldsmiths, University of London, is excited about the possibilities of the technology, stating...

"It's anyone's guess what they are, but DeepMind's focus on learning through actually watching the screen, rather than being fed data from the game code, suggests to me that they're interested in image and video data," … "There's a part of me that hopes that Google views DeepMind as an opportunity to simply research something for the fun of it, monetisation be damned."

I tend to doubt that something as complex and sophisticated as DeepMind was designed for the sole purpose of playing video games with no monetization in sight, but I'm sure thinking of it as nothing more than a harmless Atari 2600 addict might help some people sleep easier at night.

Staff Writer at CinemaBlend.